“The great watershed in optimization isn’t between linearity and nonlinearity, but convexity and nonconvexity.” - R.Tyrrell Rockafellar

Convex optimization being a vast topic, the main agenda of these articles is to give you a basic understanding of how it works and we’ll discuss a few methods with examples.

In simple terms, the core of machine learning is minimizing the error or maximizing the distance (error and distance are nothing but functions). Before starting with convex optimization, let’s discuss more on why “Convexity” is stressed in Machine Learning.

Some properties that make convexity very important:

- We can find a minimum point for any convex function/set

- Efficient algorithms have been developed around convexity properties

- Scales well with size of data and no.of variables in a model

- Smoothness of the function helps to be less sensitive to noise in the data

- Interpretability of convex functions is very high

CONVEX OPTIMIZATION:

Let’s break it down by explaining each term.

Optimization:

Optimization is simply choosing an optimal option i.e., one with minimum cost when provided with many options.

General Optimization problems follow below form:

\[\begin{aligned} \Large{\min_{x} \quad} & \Large{f_{0}(x)}\\ \textrm{s.t.} \quad & \small{f_{i}(x) \le b_{i}, \quad i=1,2...,m\\} \end{aligned}\]- \(x = [x_1,...x_n]\)(Optimization/ decision variables)

- \(f_0 : R^n → R\) (Objective function)

- \(f_i : R^n → R\) (Constraints)

Optimal solution $x^∗$(vector) has the smallest value of $f_0(x)$ among all vectors satisfying the constraints.

Ex:

\[\begin{aligned} \Large{\min_{\theta} \quad} & \Large{f_\theta(x,y) : x^2 + y^2 − 4 = 0}\\ \textrm{s.t.} \quad & \small{x,y ≥ 0}\\ \end{aligned}\]

(NOTE: Constraints aren’t mandatory to call it an optimization problem)

Real life examples:

- Data Fitting

- Portfolio Optimization

Tip: Always look for the Variables(V), Objective(O), Constraints(C)

Portfolio Optimization

- V: amount invested in different areas

- O: Overall risk/ return variance

- C: Budget, Investment per asset

Data Fitting

- V: model parameters

- O: Prior info, parameter limits

- C: Accuracy / Precision limit

CONVEXITY:

How do we find out if a function and set given is convex? . What are convex sets/ functions?

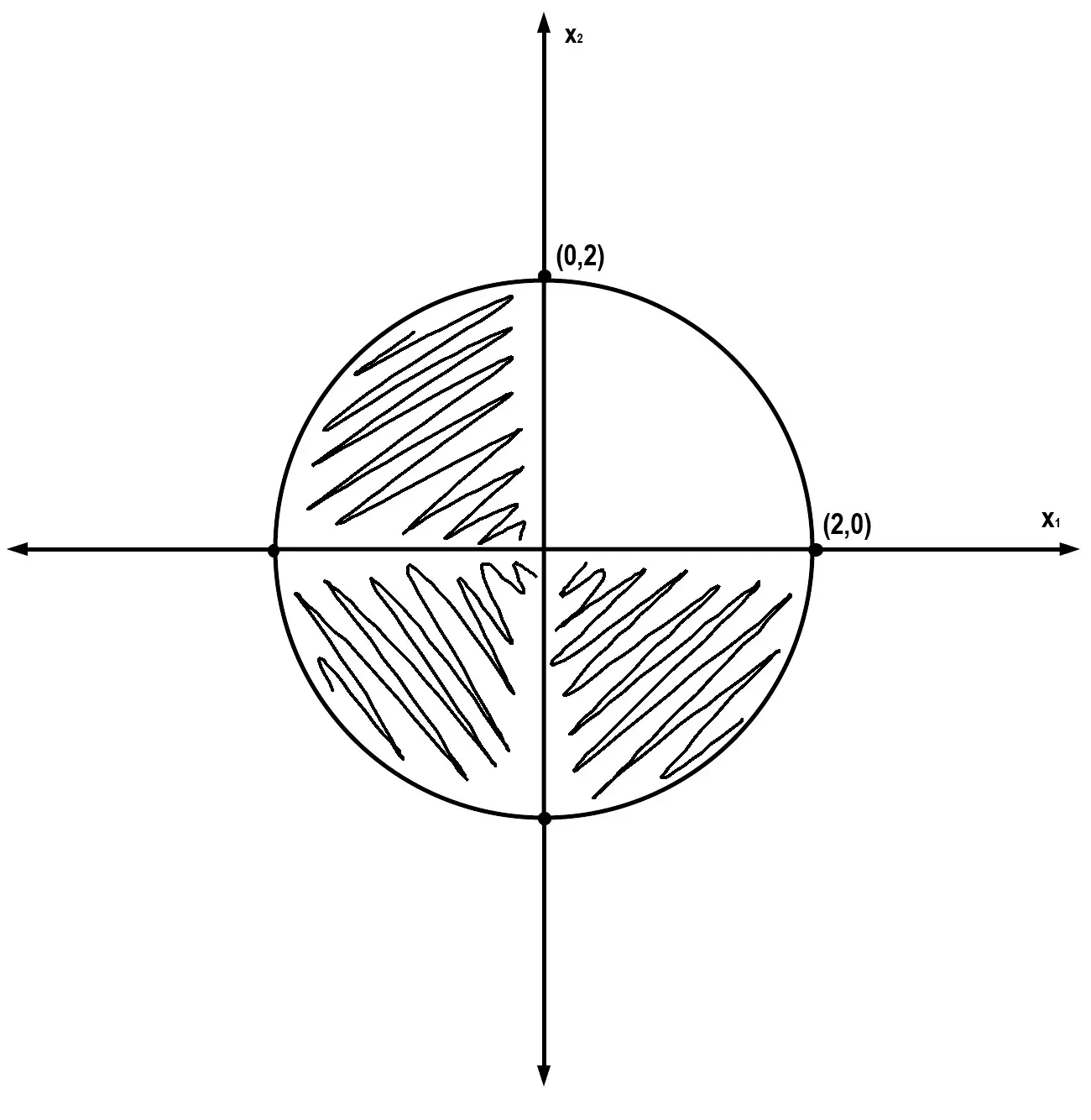

Convex Sets:

A Set(points in space) is said to be a convex set if, for any 2 points inside the set, the line segment joining the 2 points lie completely inside the set.

Points to remember:

• Lines are always convex sets[ (ax=b), (ax { ≤ , ≥ , <,> } b)]

• If 2 sets S1, S2 are convex then S1 ∩ S2 is always convex.

• Norms associated with less than symbol are always convex

• In case of matrix(A), Ax=b is convex, if all the vectors when broken down are convex

Convex Functions:

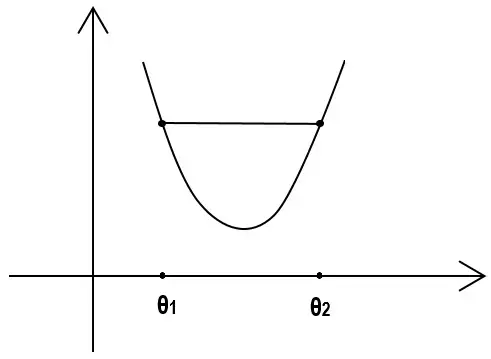

A Functions whose epigraph(region above the function) is a convex set, is a Convex Function.

If there’s a line joining any 2 points in a given function, then all other points of the function between those 2 points should be either on or below the line. (as visible in the picture below, all function values are below line joining $ θ_1 $ & $ θ_2 $ hence it is a convex funcion)

Convex function:

\[\begin{aligned} \Large{\min_{x} \quad} & \Large{f_{0}(x)}\\ \textrm{s.t.} \quad & \small{f_{i}(x) \le b_{i}, \quad i=1,2...,m\\} \end{aligned}\]

4 ways to say if it is a convex function:

- $\frac{∂^2f(θ)}{∂^2θ} ≥ 0$

- Hessian Matrix is Positive Semi-Definitie i.e., it’s eigenvalues $\ge$ 0

- Should satisfy triangular inequality

- Visualize the function and say by looking

If objective function and constraints($=, \le$ ) are convex then it is a convex optimization problem.

Convex Optimization problem is usually in the following form:

\[\begin{aligned} \Large{\min_{x} \quad} & \Large{f_0(x,y) : x^2 + y^2 − 4 = 0}\\ \textrm{s.t.} \quad & \small{x,y ≥ 0}\\ \end{aligned}\]where $ f_0(x),f_1(x) …f_m(x) : \mathbb{R}^n → R $ are convex i.e. if they satisfy(triangular inequality)

\[\Large{f_i(\alpha x + \beta y) ≤ \alpha f_i(x) + \beta f_i(y)}\]where $\forall \space x, y \in \mathbb{R}^n$ and $\forall \space \alpha, \beta \in \mathbb{R}$ with $ \alpha + \beta = 1, \alpha ≥ 0, \beta ≥ 0$

(Note: If there are no constraints and objective function is convex, it’s still Convex Optimization.)

Curious to learn different ways to solve convex optimization problems? See you in Convex Optimization (Part-2)

References:

[1] Convex Optimization by Stephen Boyd

[2] Pattern Recognition and Machine Learning by Christopher Bishop

[3] Convex Optimization Visually explained ? (Youtube)

[4] Convex Optimization(Youtube)